President Biden Signs Executive Order Introducing New Regulatory Framework for AI-Enabled Technology in Healthcare

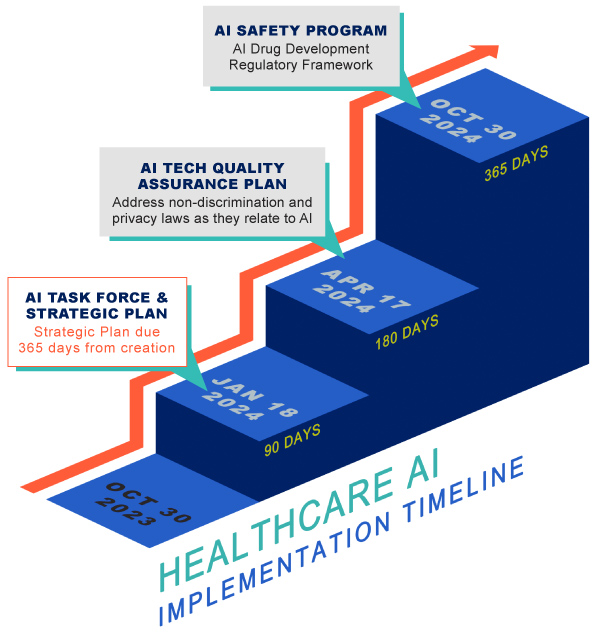

On October 30, 2023, President Biden signed an executive order establishing a roadmap for the regulation of artificial intelligence (“AI”) in various industries, including healthcare. The order is the first clear signal of a comprehensive government policy to address the numerous legal and regulatory implications of AI-enabled technology in the healthcare industry, including diagnostics, drug and medical device development, personalized care delivery, and patient monitoring. Shortly after the executive order was signed, on November 3, 2023, the Office of Management and Budget (“OMB”) released for comment a draft policy that proposes new agency requirements in key areas, including AI governance, innovation, and risk management, and that would direct agencies to adopt specific risk management practices for AI uses that impact public safety.1

The executive order directs the Department of Health & Human Services (“HHS”) to:

- establish an AI Task Force charged with developing a strategic plan for responsible deployment and use of AI in the health and human services sector (including research and discovery, drug and device safety, healthcare delivery and financing, and public health);

- develop an AI technology quality assurance policy to evaluate important aspects of the performance of AI-enabled healthcare tools;

- advance compliance with federal nondiscrimination laws by health and human services providers that receive federal financial assistance;

- create an AI safety program that establishes a common framework for approaches to identifying and capturing clinical errors resulting from AI deployed in healthcare settings; and

- develop a strategy for regulating the use of AI or AI-enabled tools in drug development processes.

Takeaways

Stakeholders should expect AI-related guidance from HHS, the Food and Drug Administration (“FDA”), and other government agencies in the coming months, as the executive order sets an ambitious implementation timeline for the Secretary of HHS, as outlined below.

1. Responsible Development and Deployment of AI and AI-Based Technologies

HHS has one year to develop policies and frameworks, possibly including regulatory action as appropriate, for the responsible deployment and use of AI and AI-enabled technologies in the health and human services sector. Although formal AI-specific regulation and guidance are lacking, many stakeholders have already implemented policies on the ethical use of AI and AI-enabled technologies to address obligations under existing laws, such as the Health Insurance Portability and Accountability Act (HIPAA) and state privacy laws. These policies lay the foundation for any organizational policies and procedures that HHS may require under the executive order. Related topics may include:

- Incorporating human oversight and stakeholder collaboration in designing AI and AI-enabled technologies;

- Developing AI systems with diverse perspectives, including by reviewing and assessing underlying datasets to mitigate risks of discrimination and harmful bias;

- Incorporating safety, privacy and security standards into the software development life cycle;

- Designing AI systems to be interpretable, allowing humans to understand their operations and the meaning and limitations of their outputs; and

- Documenting design decisions, development protocols and alignment with responsible AI design.

2. Quality Assurance Policy

Under the executive order, HHS is tasked with establishing a strategy to maintain quality, including premarket assessment and postmarket oversight of the performance of AI-enabled healthcare technology against real-world data.

3. Medical Devices

To date, the FDA has issued guidance on AI-based software as a medical device and draft guidance on updating AI/machine learning (“ML”)-enabled medical device software functions. Many AI/ML-enabled medical devices have already been approved or cleared by the FDA, primarily in radiology but also in other areas, including cardiology, neurology, hematology, gastroenterology/urology, anesthesiology, and ear, nose and throat. In response to the executive order, we anticipate that the FDA may further clarify existing regulations and guidance and finalize draft guidance to address open questions such as:

- Will postmarket assessment of AI-enabled healthcare technology require additional measures beyond the FDA’s existing medical device reporting mechanisms?

- How will quality standards impact overall product development requirements for products distributed within and outside the U.S.?

- What transparency and reporting requirements will apply to address trends or propose changes that may be necessary in light of postmarket safety issues or other real-world data?

4. Health Information Technology

Although less progress has been made in adopting accepted AI standards in other areas of healthcare delivery, such as the use of AI for billing and coding, practitioner documentation, or scheduling and records management, the executive order may push regulators to focus on these areas. Key topics may include:

- The accuracy and reliability of coding data for AI-enabled billing and coding tools;

- Accurate recording and speech recognition, the ability to distinguish among multiple speakers, and the ability to capture clinical reasoning in a way that reflects sound documentation practices and supports accurate claim submission;

- Processes to oversee and measure the impact on patient care and patient satisfaction; and

- Monitoring and oversight of patient scheduling and access to care.

5. Privacy and Nondiscrimination

The executive order makes numerous references to individuals’ privacy with respect to AI-enabled technology and explicitly calls on Congress to advance comprehensive privacy legislation. The executive order also underscores the importance of reducing bias and avoiding discrimination in AI-enabled technology. Beyond the considerations noted above regarding responsible development and use of AI and AI-enabled technologies, key takeaways include:

- Recognizing that AI can draw inferences about health conditions, outcomes and services from various types of data (e.g., see our recent Golden Flag Alert on Washington’s My Health My Data Act); and

- Identifying biases and potential discrimination in AI-enabled technologies.

6. AI Safety

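The executive order underscores the importance of monitoring AI-enabled technologies for potential harm, and the AI safety program it directs HHS to create is to include a central repository for tracking incidents that harm patients, caregivers, or others. That repository may be accompanied by mandatory reporting obligations, or by penalties and corrective actions for incidents of harm that are reported independently.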

7. Drug Development Framework

The drug development process includes a wide range of activities, from clinical research to product manufacturing to postmarket surveillance, all of which are subject to an established regulatory regime in addition to FDA guidance. Key questions include whether the regulatory framework required by the executive order will take the form of proposed regulations issued for public comment, and how it will align with or overlap existing FDA efforts around AI.

In May 2023, the FDA published a discussion paper laying out current uses and considerations for various aspects of AI/ML in the drug development lifecycle. Many of the key questions and issues raised by the FDA may be relevant to HHS’s drug development mandate. It is unclear from the executive order how or whether any boundaries will be drawn with respect to existing FDA policies and guidance. Some of the key issues for those involved in the drug development process include:

- Supplementing or replacing existing standards for FDA-cleared or -approved products that currently incorporate AI;

- Overall ethical considerations;

- The appropriate level of information to evaluate AI model logic and performance;

- The testing and validation standards required to ensure data quality, reliability, reproducibility and accuracy across drug development; and

- Incorporating open-source and real-world data into AI model development and the appropriate level of documentation related to data source selection, inclusion and exclusion.

1. 88 Fed. Reg. 75625 (Nov. 3, 2023). The full text of the draft memorandum is available for review at https://www.ai.gov/input and https://www.regulations.gov.